5 Important Analysis Questions in e-Learning

· Often, because of shortened project timelines, the Analysis phase of the ADDIE process is cut short or skipped altogether. Yet this may well be the most important phase of the process: it is during Analysis that you uncover the information critical for the learner to be successful in their job.

· Five questions to always ask

o What do you expect learners to be able to DO after completing the course that they can’t do now?

o What are the consequences TO THE LEARNER if the learner fails to master the intended outcomes?

o Can you show me an active demonstration, a detailed simulation, or provide an opportunity to directly observe the desired performance?

o What specific performance mistakes do new learners regularly make?

o What tools, resources, job aids, or help do successful performers (or even experts) use to do these tasks?

· Analysis is a critical activity in the process of creating any instruction, and it is particularly vital in making it possible to create engaging interactivity in e-learning projects.

·

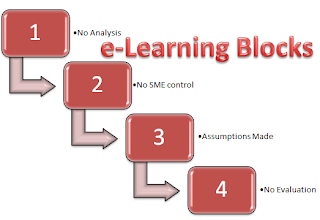

Analysis activities in any e-learning development process uncover all the details and project constraints that will fuel the design. e-Learning projects are often at a disadvantage in organizations where insufficient time is allotted for analysis, where SMEs use the opportunity to overwhelm the designer with too much content, or where it is assumed that analysis is unnecessary.

· Again, the questions:

o What do you expect learners to be able to DO after completing the course that they can’t do now?

o What are the consequences TO THE LEARNER if the learner fails to master the intended outcomes?

o Can you show me an active demonstration, a detailed simulation, or provide an opportunity to directly observe the desired performance?

o What specific performance mistakes do new learners regularly make?

o What tools, resources, job aids, or help do successful performers (or even experts) use to do these tasks?

· How important is analysis?

o The most appropriate process for creating e-learning is an iterative one that carries out the necessary activities in smaller chunks: designing ideas, testing them with rapid prototypes, and cycling back to conduct more analysis and design as the project requires. It is unproductive to demand that all analysis occur at one time, before you are fully engaged in the project; sometimes you are not even aware of the questions that need to be asked until you begin design.

· What would you do if the client cannot articulate or agree to performance outcomes?

o It is truly rare for an organization to invest in training on a topic that has no performance component whatsoever. If that seems to be the case, first push back with the SMEs. SMEs often spend their lives becoming content experts and simply lose track of how the information is actually used. e-Learning only works if learners DO something meaningful, memorable, and observable (so feedback can be delivered).

· Are risks/consequences to the learner important?

o Yes, risks and consequences are a really powerful tool for creating a sense of challenge and excitement in an e-learning piece. There are other ways as well, such as building suspense through narrative, delaying judgment, and developing compelling intrinsic feedback.

· What is the 10% - 20% - 70% rule about competency?

o This question refers to the idea that in many corporate environments there is a general pattern: about 10% of what a successful performer does is learned in formal training, 20% through mentoring and networking with co-workers, and 70% on the job. You can find this idea referenced and supported in a large number of human performance contexts, but I believe it originally came from research conducted by the Center for Creative Leadership.

· How can similar results be achieved with lower-end production values?

o The key is to recognize that the instructional value is not in the production values, but in the interactions that engage the learner’s attention. Of course, production values have some impact, but they are by no means the primary reason for success. Replace an animation sequence with a slide show of still images. Dispense with audio if necessary. There are a number of ways to achieve the same intent. But while you can achieve some results with lower-end production values, I think it is nearly impossible to do so with ZERO production values.

· How do you come up with the performance outcomes? Is it you or the company?

o The performance outcomes have to come from the stakeholders within the company (either willingly or with prodding!).

· How do you convince a client expecting only explication of inert knowledge (company history and organization structure) to accept that the training should highlight actionable behaviors?

o It’s a hard task, especially given how difficult it is to get people to let go of preconceptions. We find the most powerful method is simply to help decision makers experience the difference between inert, content-bound e-learning and engaging, compelling, learner-centered training. Often, it isn’t that decision makers want to make poor choices, but rather that they have simply never encountered alternatives. Of course, it is difficult to change perceptions all at once, and legacy decisions can limit what you can do on any given project, but try to take small steps, documenting successes as you can.

· How can you counter the "productivity" arguments of e-learning software providers who claim/advocate that SMEs develop their own training (bypassing instructional designers in the process)?

o This is really a philosophical issue. If e-learning is viewed as a publishing project, there’s really no way to counter this argument. The view is that the demonstrable existence of e-learning programs equals success, so efficiency is simply a measure of how quickly PowerPoint decks can be converted to online delivery (for example). If, on the other hand, e-learning is measured by outcomes, then even a superficial investigation into training reveals that content access is not sufficient to accomplish change in performance.

· Identify content first, then outcomes, then map the content to the outcomes?

o Well, yes, that’s what I generally find to work better, but the order doesn’t really matter. The reason I tend to get the content out first is that the SMEs are bursting at the seams to tell you this stuff. If you try to force the discussion exclusively to performance objectives, the content is going to be constantly inserted into the discussion. It seems better to get it documented and out of the way. But truly, this can vary quite a bit based on the environment, so my advice is to be flexible. The main goal is to get enough information to be able to create the grid.

· I work in a situation where I cannot use audio. I have found this problematic because it requires additional slides, and I feel call-outs are distracting. Any suggestions?

o Honestly, audio is not always desirable. To the extent you are delivering content, it is most useful when under user control. So instead of focusing on how to force the content on the learner, create a challenge that requires the information to solve. Then provide access to the content you would normally be trying to parse into small call-outs. When the learner is reading for a purpose, you can be more general in how you provide access to the learning content.


· So for content-driven compliance training, are scenarios the best bet?

o It’s difficult to make a blanket statement about any content. Scenarios tend to be valuable in creating a context to make sense of information that might otherwise seem meaningless in the abstract. Sometimes, though, I have seen scenarios made so elaborate that the actual content is obscured. So always try to keep the end goal in mind and not let the design get out of control.
